So many readers have asked David 9 for an introduction to TensorBoard.

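As we all know, training in TensorFlow generally takes two steps: first build the computation graph, then make the graph actually "flow" (that is, run training on the graph).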
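
The define-then-run idea can be sketched in plain Python. This is only a toy analogy: the `Node` class and its `run` method are hypothetical stand-ins, not TensorFlow's API.

```python
# A toy define-then-run graph in plain Python. The Node class is a
# hypothetical stand-in and not TensorFlow's API.
class Node:
    def __init__(self, name, fn, inputs=()):
        self.name = name
        self.fn = fn
        self.inputs = inputs

    def run(self, feed):
        """Evaluate this node, taking placeholder values from feed."""
        if self.name in feed:
            return feed[self.name]
        return self.fn(*(n.run(feed) for n in self.inputs))

# Step 1: build the graph. Nothing is computed yet.
x = Node("x", None)                      # behaves like a placeholder
w = Node("w", lambda: 3.0)               # behaves like a constant
y = Node("y", lambda a, b: a * b, inputs=(x, w))

# Step 2: make the graph "flow" by feeding a value and running it.
result = y.run({"x": 2.0})
print(result)  # 6.0
```

Building `y` only records how it depends on `x` and `w`; the multiplication happens when `run` is called with a feed, which mirrors the split between graph construction and `sess.run`.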

TensorBoard tracks both steps. To enable this tracking, you must first create a TensorFlow log writer object:

    # tf.train.SummaryWriter was removed in TensorFlow 1.0; use tf.summary.FileWriter
    writer = tf.summary.FileWriter(logs_path, graph=tf.get_default_graph())

Then start the TensorBoard service:

    [root@c031 mnist]# tensorboard --logdir=/tmp/mnist/2
    TensorBoard 1.5.1 at http://c031:6006 (Press CTRL+C to quit)

You can then see the graph you defined:

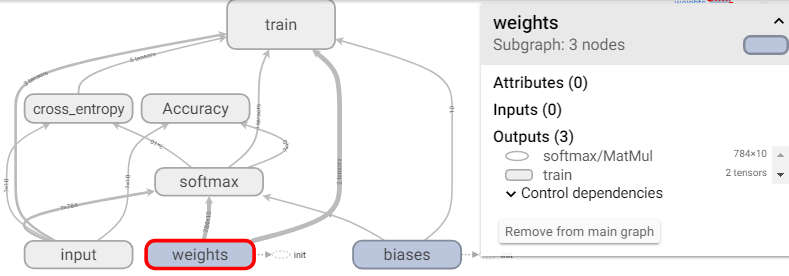

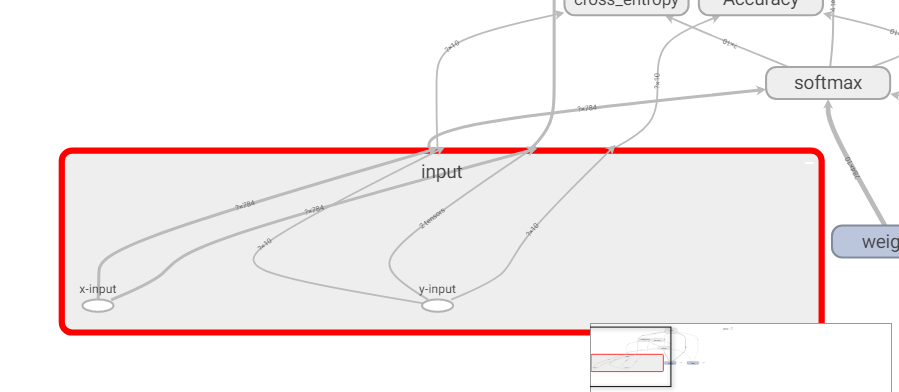

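Each box in the GRAPHS tab represents a name scope in the TensorFlow code. The graph above, for example, defines 7 scopes: train, cross_entropy, Accuracy, softmax, input, weights, biases. Each scope may contain Variables or the Tensors of compute operations, and you can double-click a box to expand it: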

As the figure above shows, the input scope contains two placeholders: x-input and y-input.


The corresponding graph definition code is:

    with tf.name_scope('input'):
        # None -> batch size can be any size, 784 -> flattened mnist image
        x = tf.placeholder(tf.float32, shape=[None, 784], name="x-input")
        # target 10 output classes
        y_ = tf.placeholder(tf.float32, shape=[None, 10], name="y-input")
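
Ops created inside `tf.name_scope('input')` get names prefixed with `input/`, and that prefix is what lets TensorBoard draw one box per scope. Here is a minimal plain-Python sketch of that grouping. The list of op names is written by hand for illustration, not captured from a real graph, and `group_by_scope` is a hypothetical helper.

```python
from collections import defaultdict

# Illustrative op names in the "scope/op" form TensorFlow uses.
# This list is a hand-written assumption, not output from a real graph.
op_names = [
    "input/x-input",
    "input/y-input",
    "weights/Variable",
    "biases/Variable",
    "softmax/MatMul",
    "softmax/Softmax",
]

def group_by_scope(names):
    """Group op names by top-level scope (the text before the first '/')."""
    groups = defaultdict(list)
    for name in names:
        scope, _, op = name.partition("/")
        groups[scope].append(op)
    return dict(groups)

boxes = group_by_scope(op_names)
print(boxes["input"])  # ['x-input', 'y-input']
```

Each key of `boxes` corresponds to one box in the GRAPHS tab, and the values are what you would see after expanding it.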

Of course, everything we have visualized so far is the static graph.

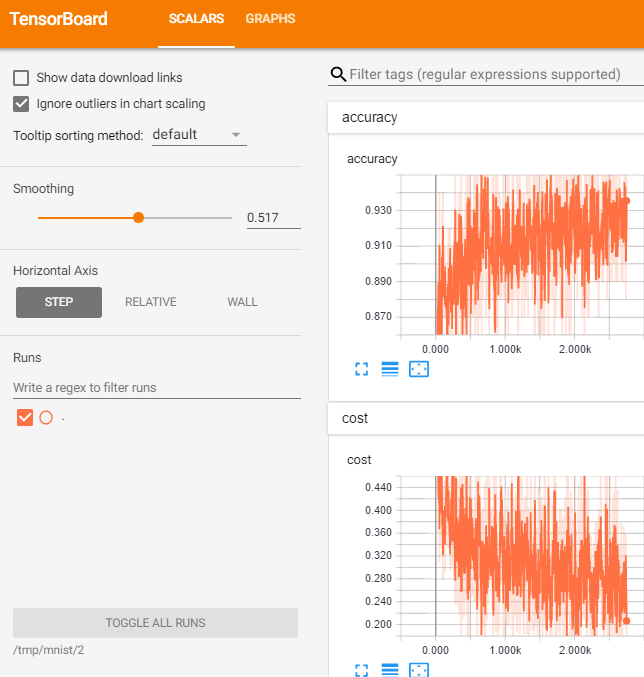

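What we need more is dynamic logging while the graph is being trained, and so far there is no dynamic scalars data. So we define some summary operations for logging (below, we log the cost and accuracy scalars):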

    # create a summary for our cost and accuracy
    tf.summary.scalar("cost", cross_entropy)
    tf.summary.scalar("accuracy", accuracy)

Once these are defined, we don't need to run them one by one. A single merge operation runs them all together:

    summary_op = tf.summary.merge_all()

Finally, when the graph actually runs during training, remember to execute these operations and write their results to the log:

    # perform the operations we defined earlier on batch
    _, summary = sess.run([train_op, summary_op], feed_dict={x: batch_x, y_: batch_y})
    # write log
    writer.add_summary(summary, epoch * batch_count + i)

The second argument to add_summary is the x-axis value on the scalar chart, and the scalar computed by the summary is the y-axis value.
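
To see why `epoch * batch_count + i` works as an x-axis value, here is a small plain-Python check that it produces one increasing step per batch across epochs. The value 55000 is an assumption (the default training-split size of the MNIST tutorial loader); `batch_size` and `training_epochs` mirror the config in the complete code.

```python
# Assumed values: 55000 is the default training-split size of the MNIST
# tutorial loader; batch_size and training_epochs mirror the complete code.
num_examples = 55000
batch_size = 100
training_epochs = 5

batch_count = num_examples // batch_size  # batches per epoch

steps = []
for epoch in range(training_epochs):
    for i in range(batch_count):
        # the same expression that is passed to writer.add_summary
        steps.append(epoch * batch_count + i)

print(batch_count)          # 550
print(steps[0], steps[-1])  # 0 2749
print(steps == list(range(training_epochs * batch_count)))  # True
```

Under these assumptions each scalar chart gets 2750 points, with no gaps or repeats on the x-axis.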

To reproduce this, you can use the complete code below:

    import tensorflow as tf

    # reset everything to rerun in jupyter
    tf.reset_default_graph()

    # config
    batch_size = 100
    learning_rate = 0.5
    training_epochs = 5
    logs_path = "/tmp/mnist/2"

    # load mnist data set
    from tensorflow.examples.tutorials.mnist import input_data
    mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

    # input images
    with tf.name_scope('input'):
        # None -> batch size can be any size, 784 -> flattened mnist image
        x = tf.placeholder(tf.float32, shape=[None, 784], name="x-input")
        # target 10 output classes
        y_ = tf.placeholder(tf.float32, shape=[None, 10], name="y-input")

    # model parameters will change during training so we use tf.Variable
    with tf.name_scope("weights"):
        W = tf.Variable(tf.zeros([784, 10]))

    # bias
    with tf.name_scope("biases"):
        b = tf.Variable(tf.zeros([10]))

    # implement model
    with tf.name_scope("softmax"):
        # y is our prediction
        y = tf.nn.softmax(tf.matmul(x, W) + b)

    # specify cost function
    with tf.name_scope('cross_entropy'):
        # this is our cost
        cross_entropy = tf.reduce_mean(-tf.reduce_sum(y_ * tf.log(y), reduction_indices=[1]))

    # specify optimizer
    with tf.name_scope('train'):
        # optimizer is an "operation" which we can execute in a session
        train_op = tf.train.GradientDescentOptimizer(learning_rate).minimize(cross_entropy)

    with tf.name_scope('Accuracy'):
        # Accuracy
        correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    # create a summary for our cost and accuracy
    tf.summary.scalar("cost", cross_entropy)
    tf.summary.scalar("accuracy", accuracy)

    # merge all summaries into a single "operation" which we can execute in a session
    summary_op = tf.summary.merge_all()

    with tf.Session() as sess:
        # variables need to be initialized before we can use them
        # (tf.initialize_all_variables is deprecated in favor of this call)
        sess.run(tf.global_variables_initializer())

        # create log writer object
        writer = tf.summary.FileWriter(logs_path, graph=tf.get_default_graph())

        # perform training cycles
        for epoch in range(training_epochs):
            # number of batches in one epoch
            batch_count = int(mnist.train.num_examples / batch_size)

            for i in range(batch_count):
                batch_x, batch_y = mnist.train.next_batch(batch_size)

                # perform the operations we defined earlier on batch
                _, summary = sess.run([train_op, summary_op], feed_dict={x: batch_x, y_: batch_y})

                # write log
                writer.add_summary(summary, epoch * batch_count + i)

            if epoch % 5 == 0:
                print("Epoch: %d" % epoch)

        # evaluate on the test set inside the session so accuracy.eval has a default session
        print("Accuracy: %s" % accuracy.eval(feed_dict={x: mnist.test.images, y_: mnist.test.labels}))
        print("done")
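
The two logged scalars can be checked by hand on a tiny batch. The sketch below recomputes them in plain Python using the same formulas as the `cross_entropy` and `Accuracy` scopes. The two samples over 3 classes are made-up numbers for illustration (the real model has 10 classes), and the function names are hypothetical.

```python
import math

# Two hand-made samples over 3 classes (the real model uses 10).
# The numbers are illustrative assumptions, not MNIST outputs.
y_pred = [[0.7, 0.2, 0.1],   # softmax outputs, each row sums to 1
          [0.1, 0.3, 0.6]]
y_true = [[1, 0, 0],         # one-hot labels
          [0, 1, 0]]

def cross_entropy(y_true, y_pred):
    """Mean over samples of -sum(y_ * log(y)), as in the cross_entropy scope."""
    per_sample = [
        -sum(t * math.log(p) for t, p in zip(t_row, p_row))
        for t_row, p_row in zip(y_true, y_pred)
    ]
    return sum(per_sample) / len(per_sample)

def argmax(row):
    """Index of the largest entry in row."""
    return max(range(len(row)), key=row.__getitem__)

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class matches the label."""
    hits = [argmax(t) == argmax(p) for t, p in zip(y_true, y_pred)]
    return sum(hits) / len(hits)

cost = cross_entropy(y_true, y_pred)
acc = accuracy(y_true, y_pred)
print(round(cost, 4))  # 0.7803
print(acc)             # 0.5
```

The first sample is classified correctly and the second is not, so the accuracy is 0.5. The cost is the average of -log(0.7) and -log(0.3), the log-probabilities assigned to the true classes.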


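This article is licensed under the Attribution-NonCommercial-NoDerivs 3.0 China Mainland license. Copyright belongs to “David 9的博客” (David 9's blog) as an original work. To republish it, please contact WeChat: david9ml, or email: yanchao727@gmail.com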
