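Today we pick up where the previous post, "#9 Generative Adversarial Networks 101: The Ultimate Beginner's Guide in Plain Language", left off, and walk step by step through writing a generative adversarial network (GAN). The reference code is at https://github.com/AYLIEN/gan-intro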

Key Python libraries: TensorFlow, numpy, matplotlib, scipy (the full source listing also imports seaborn for plot styling)

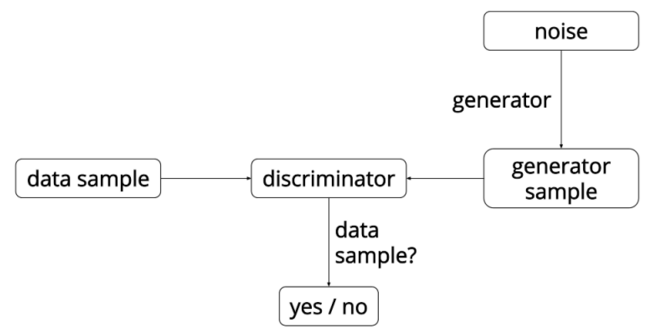

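As covered last time, a GAN trains two models at once: a generator and a discriminator. The generator does its best to produce data that imitates the real distribution; the discriminator does its best to tell real samples apart from the generator's imitations. Training continues until the discriminator can no longer distinguish the two.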

The figure above shows the training process of a GAN; the code we are about to walk through implements exactly this process.

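In the figure, the green curve is a Gaussian (the real distribution). Its mean and variance are fixed, so it never moves. The red curve is the generator's distribution: as it competes with the discriminator during training, it keeps shifting to imitate the green Gaussian. The blue curve is the discriminator's output; by convention, the higher its value at a point, the more likely the discriminator considers data there to be real. Around the real-data mean of 4, the blue curve gets lower and lower as training proceeds, which means the discriminator finds it harder and harder to tell generated samples from real ones.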
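To make the blue curve's behaviour concrete: the original GAN paper shows that, for a fixed generator, the ideal discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)). The sketch below evaluates that formula in plain Python for the real distribution used in this post (mean 4, standard deviation 0.5, as set in `DataDistribution` in the source listing). The two generator settings are made-up values chosen for illustration, and `normal_pdf` and `optimal_discriminator` are hypothetical helper names, not part of the reference code.

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of a normal distribution N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def optimal_discriminator(x, real, fake):
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) for two Gaussians.

    `real` and `fake` are (mu, sigma) pairs.
    """
    p_data = normal_pdf(x, *real)
    p_g = normal_pdf(x, *fake)
    return p_data / (p_data + p_g)


real = (4.0, 0.5)          # real data distribution
early_fake = (0.0, 1.0)    # assumed: an untrained generator far from the data
late_fake = (4.0, 0.5)     # assumed: a generator that matches the data exactly

d_early = optimal_discriminator(4.0, real, early_fake)
d_late = optimal_discriminator(4.0, real, late_fake)
print(d_early)  # very close to 1: real samples are easy to spot
print(d_late)   # 0.5: the discriminator can only guess
```

Under these assumptions the ideal discriminator's value at x = 4 falls from nearly 1 to 0.5 as the generator catches up, which matches the blue curve sinking around the real mean.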

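Now let's dig into the most intricate TensorFlow source logic David 9 has ever read.

First, the overall logic:

As mentioned, two neural models are trained in alternation. The generator takes a noise distribution as input and maps it to a distribution that closely resembles the real one, producing forged samples. The discriminator takes samples, including the generator's forgeries, and decides whether each one is real. If in the end it cannot tell them apart, congratulations: the model has trained well.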

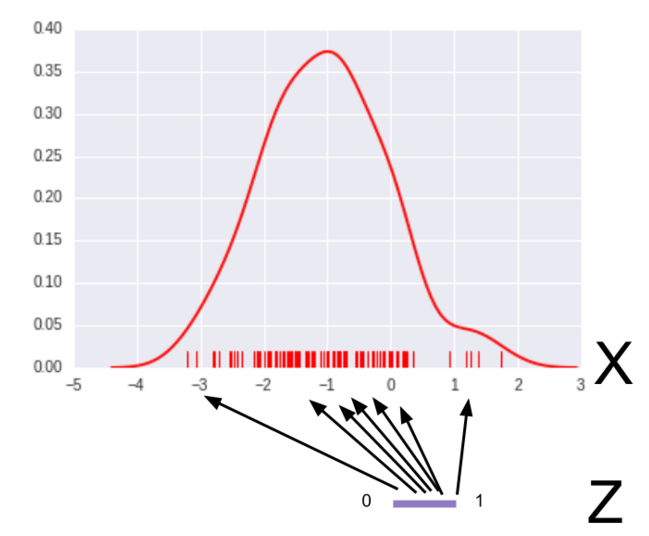

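The mapping performed by our generator looks much like the figure below:

Z is simply a uniform, evenly spaced distribution with a little noise added; X is the real distribution. We want the network to take evenly spaced input values and, through its outputs, tell us how large the probability density (pdf) is at each value. In the figure's example, the pdf is clearly largest around -1.

How do we write Z in code? It is simple: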

```python
class GeneratorDistribution(object):
    def __init__(self, range):
        self.range = range

    def sample(self, N):
        return np.linspace(-self.range, self.range, N) + \
            np.random.random(N) * 0.01
```

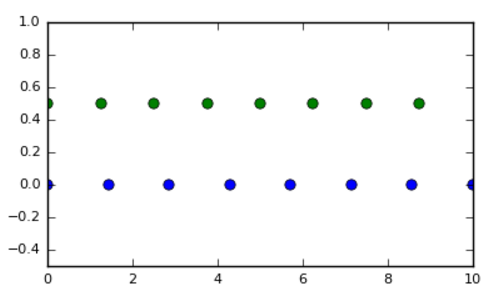

The sampled values look roughly like the figure below:

They are evenly spaced points with just a tiny bit of noise added.
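The same idea without NumPy, as a rough sketch: `sample_noise` is a hypothetical stand-in for `GeneratorDistribution.sample`, built from an evenly spaced grid plus a jitter in [0, 0.01). Spacing the inputs evenly, rather than drawing them independently, is a form of stratified sampling. The range of 8 used here matches the value passed in the source's `main`.

```python
import random


def sample_noise(value_range, n, jitter=0.01, rng=random):
    """Evenly spaced points in [-value_range, value_range] plus small noise.

    Mirrors np.linspace(-r, r, n) + np.random.random(n) * 0.01.
    """
    if n == 1:
        grid = [-float(value_range)]
    else:
        step = 2.0 * value_range / (n - 1)
        grid = [-value_range + i * step for i in range(n)]
    return [g + rng.random() * jitter for g in grid]


random.seed(42)
z = sample_noise(8, 5)
print(z)  # roughly -8, -4, 0, 4, 8, each nudged upward by less than 0.01
```

Because the jitter is much smaller than the grid spacing, the samples stay sorted and cover the whole input range in every batch.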

The generator is a small neural network: a linear layer, then a nonlinearity (softplus in the source), then a final linear layer.
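As a plain-Python illustration of that shape (linear, softplus, linear), here is a forward pass for a single scalar input. The weights are arbitrary numbers picked for the example, not trained values, and `generator_forward` is a hypothetical name; the hidden size of 4 matches `mlp_hidden_size` in the source.

```python
import math


def softplus(x):
    """Numerically stable softplus: log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def generator_forward(z, w0, b0, w1, b1):
    """Linear -> softplus -> linear, for one scalar input z.

    w0, b0: weights and biases of the hidden layer (one entry per hidden unit).
    w1, b1: weights of the output layer and its scalar bias.
    """
    hidden = [softplus(w * z + b) for w, b in zip(w0, b0)]
    return sum(w * h for w, h in zip(w1, hidden)) + b1


# Arbitrary illustrative parameters for 4 hidden units.
w0 = [0.5, -1.0, 2.0, 0.1]
b0 = [0.0, 0.0, 0.0, 0.0]
w1 = [1.0, 1.0, -0.5, 2.0]
b1 = 0.0

print(generator_forward(0.0, w0, b0, w1, b1))  # 3.5 * ln(2), about 2.426
```

The final layer is left linear so the output can land anywhere on the real line, including around the real-data mean.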

The discriminator needs to be more powerful, so it uses three tanh hidden layers followed by a sigmoid output:

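```python
def discriminator(input, hidden_size):
    # three tanh hidden layers, each twice as wide as the generator's
    h0 = tf.tanh(linear(input, hidden_size * 2, 'd0'))
    h1 = tf.tanh(linear(h0, hidden_size * 2, 'd1'))
    h2 = tf.tanh(linear(h1, hidden_size * 2, 'd2'))
    # sigmoid squashes the output to (0, 1): the probability the input is real
    h3 = tf.sigmoid(linear(h2, 1, 'd3'))
    return h3
```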

Next, we build the TensorFlow graph, along with the loss functions for the discriminator and the generator:

```python
with tf.variable_scope('G'):
    # z: noise input; G: generated samples
    z = tf.placeholder(tf.float32, shape=(None, 1))
    G = generator(z, hidden_size)

with tf.variable_scope('D') as scope:
    # x: real samples; D1: discriminator output on real data
    x = tf.placeholder(tf.float32, shape=(None, 1))
    D1 = discriminator(x, hidden_size)
    # reuse the same weights for the second copy, fed with generated data
    scope.reuse_variables()
    D2 = discriminator(G, hidden_size)

loss_d = tf.reduce_mean(-tf.log(D1) - tf.log(1 - D2))
loss_g = tf.reduce_mean(-tf.log(D2))
```

The most magical line is probably this one:

```python
loss_d = tf.reduce_mean(-tf.log(D1) - tf.log(1 - D2))
```

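There is a single discriminator model; the only difference between D1 and D2 is that D1 is fed real data while D2 is fed the generator's forged data (`scope.reuse_variables()` makes both copies share the same weights). Note that in the code the discriminator's output means "how likely this sample is to come from the real distribution". So when optimizing the discriminator, the goal is to make D1's output as large as possible and D2's output as small as possible, which is exactly what minimizing `-log(D1) - log(1 - D2)` does.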
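A quick numeric check of that objective in plain Python. The probabilities are made-up values, and `discriminator_loss` is a hypothetical helper that mirrors the `loss_d` expression for a single sample; it is not code from the reference implementation.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Per-sample -log(D1) - log(1 - D2)."""
    return -math.log(d_real) - math.log(1.0 - d_fake)


confident = discriminator_loss(d_real=0.9, d_fake=0.1)   # D is right on both
undecided = discriminator_loss(d_real=0.5, d_fake=0.5)   # D can only guess
fooled = discriminator_loss(d_real=0.1, d_fake=0.9)      # D is wrong on both

print(confident)  # about 0.21
print(undecided)  # 2 * ln(2), about 1.39
print(fooled)     # about 4.61
```

The loss shrinks as D1 rises toward 1 and D2 falls toward 0, and sits at 2 ln 2 when the discriminator outputs 0.5 everywhere.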

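When optimizing the generator, on the other hand, we want to fool the discriminator, so we push D2's output to be as large as possible: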

```python
loss_g = tf.reduce_mean(-tf.log(D2))
```
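Why `-log(D2)` rather than `log(1 - D2)`? The original GAN paper notes that minimizing log(1 - D(G(z))) gives weak gradients early in training, when the discriminator rejects generated samples confidently, and suggests maximizing log D(G(z)) instead, which is what this loss does. Since the discriminator ends in a sigmoid, the sketch below compares the two derivatives with respect to the pre-sigmoid logit at a few assumed values of D2; the function names are illustrative only.

```python
def grad_saturating(d_fake):
    """Derivative of log(1 - D2) w.r.t. the discriminator's pre-sigmoid logit."""
    return -d_fake


def grad_non_saturating(d_fake):
    """Derivative of -log(D2) w.r.t. the same logit."""
    return -(1.0 - d_fake)


for d_fake in (0.01, 0.5, 0.99):
    print(d_fake, grad_saturating(d_fake), grad_non_saturating(d_fake))
# At D2 = 0.01 (the generator is losing badly) the saturating form has a
# gradient of magnitude 0.01, while the non-saturating form has 0.99.
```

Both forms push D2 upward; the one used here simply keeps a strong learning signal while the generator is still far from the real data.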

That covers the hardest part. For how the optimizer and the training loop are written, please refer to the source code.
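One detail of the optimizer in the source listing is its learning-rate schedule: `tf.train.exponential_decay` with `staircase=True`, starting at 0.005 and multiplying by 0.95 every 150 steps. A plain-Python sketch of that formula follows (the function name `staircase_lr` is made up for this example):

```python
def staircase_lr(step, initial=0.005, decay=0.95, decay_steps=150):
    """initial * decay ** floor(step / decay_steps)."""
    return initial * decay ** (step // decay_steps)


for step in (0, 149, 150, 1199):
    print(step, staircase_lr(step))
# Steps 0 and 149 share the initial rate 0.005; at step 150 it drops to
# 0.005 * 0.95; by step 1199 it has been decayed 7 times.
```

With the default of 1200 training steps, the rate therefore ends at roughly 70% of its starting value.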

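Source code (as listed, it targets Python 2 and a pre-1.0 TensorFlow API: note `xrange`, `tf.initialize_all_variables()` and the `tf.concat(1, ...)` argument order):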

```python
'''
An example of distribution approximation using Generative Adversarial Networks in TensorFlow.

Based on the blog post by Eric Jang: http://blog.evjang.com/2016/06/generative-adversarial-nets-in.html,
and of course the original GAN paper by Ian Goodfellow et. al.: https://arxiv.org/abs/1406.2661.

The minibatch discrimination technique is taken from Tim Salimans et. al.: https://arxiv.org/abs/1606.03498.
'''
from __future__ import absolute_import
from __future__ import print_function
from __future__ import unicode_literals
from __future__ import division

import argparse
import numpy as np
from scipy.stats import norm
import tensorflow as tf
import matplotlib.pyplot as plt
from matplotlib import animation
import seaborn as sns

sns.set(color_codes=True)

seed = 42
np.random.seed(seed)
tf.set_random_seed(seed)


class DataDistribution(object):
    def __init__(self):
        self.mu = 4
        self.sigma = 0.5

    def sample(self, N):
        samples = np.random.normal(self.mu, self.sigma, N)
        samples.sort()
        return samples


class GeneratorDistribution(object):
    def __init__(self, range):
        self.range = range

    def sample(self, N):
        return np.linspace(-self.range, self.range, N) + \
            np.random.random(N) * 0.01


def linear(input, output_dim, scope=None, stddev=1.0):
    norm = tf.random_normal_initializer(stddev=stddev)
    const = tf.constant_initializer(0.0)
    with tf.variable_scope(scope or 'linear'):
        w = tf.get_variable('w', [input.get_shape()[1], output_dim], initializer=norm)
        b = tf.get_variable('b', [output_dim], initializer=const)
        return tf.matmul(input, w) + b


def generator(input, h_dim):
    h0 = tf.nn.softplus(linear(input, h_dim, 'g0'))
    h1 = linear(h0, 1, 'g1')
    return h1


def discriminator(input, h_dim, minibatch_layer=True):
    h0 = tf.tanh(linear(input, h_dim * 2, 'd0'))
    h1 = tf.tanh(linear(h0, h_dim * 2, 'd1'))

    # without the minibatch layer, the discriminator needs an additional layer
    # to have enough capacity to separate the two distributions correctly
    if minibatch_layer:
        h2 = minibatch(h1)
    else:
        h2 = tf.tanh(linear(h1, h_dim * 2, scope='d2'))

    h3 = tf.sigmoid(linear(h2, 1, scope='d3'))
    return h3


def minibatch(input, num_kernels=5, kernel_dim=3):
    x = linear(input, num_kernels * kernel_dim, scope='minibatch', stddev=0.02)
    activation = tf.reshape(x, (-1, num_kernels, kernel_dim))
    diffs = tf.expand_dims(activation, 3) - tf.expand_dims(tf.transpose(activation, [1, 2, 0]), 0)
    eps = tf.expand_dims(np.eye(int(input.get_shape()[0]), dtype=np.float32), 1)
    abs_diffs = tf.reduce_sum(tf.abs(diffs), 2) + eps
    minibatch_features = tf.reduce_sum(tf.exp(-abs_diffs), 2)
    return tf.concat(1, [input, minibatch_features])


def optimizer(loss, var_list):
    initial_learning_rate = 0.005
    decay = 0.95
    num_decay_steps = 150
    batch = tf.Variable(0)
    learning_rate = tf.train.exponential_decay(
        initial_learning_rate,
        batch,
        num_decay_steps,
        decay,
        staircase=True
    )
    optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(
        loss,
        global_step=batch,
        var_list=var_list
    )
    return optimizer


class GAN(object):
    def __init__(self, data, gen, num_steps, batch_size, minibatch, log_every, anim_path):
        self.data = data
        self.gen = gen
        self.num_steps = num_steps
        self.batch_size = batch_size
        self.minibatch = minibatch
        self.log_every = log_every
        self.mlp_hidden_size = 4
        self.anim_path = anim_path
        self.anim_frames = []
        self._create_model()

    def _create_model(self):
        # In order to make sure that the discriminator is providing useful gradient
        # information to the generator from the start, we're going to pretrain the
        # discriminator using a maximum likelihood objective. We define the network
        # for this pretraining step scoped as D_pre.
        with tf.variable_scope('D_pre'):
            self.pre_input = tf.placeholder(tf.float32, shape=(self.batch_size, 1))
            self.pre_labels = tf.placeholder(tf.float32, shape=(self.batch_size, 1))
            D_pre = discriminator(self.pre_input, self.mlp_hidden_size, self.minibatch)
            self.pre_loss = tf.reduce_mean(tf.square(D_pre - self.pre_labels))
            self.pre_opt = optimizer(self.pre_loss, None)

        # This defines the generator network - it takes samples from a noise
        # distribution as input, and passes them through an MLP.
        with tf.variable_scope('G'):
            self.z = tf.placeholder(tf.float32, shape=(self.batch_size, 1))
            self.G = generator(self.z, self.mlp_hidden_size)

        # The discriminator tries to tell the difference between samples from the
        # true data distribution (self.x) and the generated samples (self.z).
        #
        # Here we create two copies of the discriminator network (that share parameters),
        # as you cannot use the same network with different inputs in TensorFlow.
        with tf.variable_scope('D') as scope:
            self.x = tf.placeholder(tf.float32, shape=(self.batch_size, 1))
            self.D1 = discriminator(self.x, self.mlp_hidden_size, self.minibatch)
            scope.reuse_variables()
            self.D2 = discriminator(self.G, self.mlp_hidden_size, self.minibatch)

        # Define the loss for discriminator and generator networks (see the original
        # paper for details), and create optimizers for both
        #self.pre_loss = tf.reduce_mean(tf.square(D_pre - self.pre_labels))
        self.loss_d = tf.reduce_mean(-tf.log(self.D1) - tf.log(1 - self.D2))
        self.loss_g = tf.reduce_mean(-tf.log(self.D2))

        vars = tf.trainable_variables()
        self.d_pre_params = [v for v in vars if v.name.startswith('D_pre/')]
        self.d_params = [v for v in vars if v.name.startswith('D/')]
        self.g_params = [v for v in vars if v.name.startswith('G/')]

        #self.pre_opt = optimizer(self.pre_loss, self.d_pre_params)
        self.opt_d = optimizer(self.loss_d, self.d_params)
        self.opt_g = optimizer(self.loss_g, self.g_params)

    def train(self):
        with tf.Session() as session:
            tf.initialize_all_variables().run()

            # pretraining discriminator
            num_pretrain_steps = 1000
            for step in xrange(num_pretrain_steps):
                d = (np.random.random(self.batch_size) - 0.5) * 10.0
                labels = norm.pdf(d, loc=self.data.mu, scale=self.data.sigma)
                pretrain_loss, _ = session.run([self.pre_loss, self.pre_opt], {
                    self.pre_input: np.reshape(d, (self.batch_size, 1)),
                    self.pre_labels: np.reshape(labels, (self.batch_size, 1))
                })
            self.weightsD = session.run(self.d_pre_params)

            # copy weights from pre-training over to new D network
            for i, v in enumerate(self.d_params):
                session.run(v.assign(self.weightsD[i]))

            for step in xrange(self.num_steps):
                # update discriminator
                x = self.data.sample(self.batch_size)
                z = self.gen.sample(self.batch_size)
                loss_d, _ = session.run([self.loss_d, self.opt_d], {
                    self.x: np.reshape(x, (self.batch_size, 1)),
                    self.z: np.reshape(z, (self.batch_size, 1))
                })

                # update generator
                z = self.gen.sample(self.batch_size)
                loss_g, _ = session.run([self.loss_g, self.opt_g], {
                    self.z: np.reshape(z, (self.batch_size, 1))
                })

                if step % self.log_every == 0:
                    #pass
                    print('{}: {}\t{}'.format(step, loss_d, loss_g))

                if self.anim_path:
                    self.anim_frames.append(self._samples(session))

            if self.anim_path:
                self._save_animation()
            else:
                self._plot_distributions(session)

    def _samples(self, session, num_points=10000, num_bins=100):
        '''
        Return a tuple (db, pd, pg), where db is the current decision
        boundary, pd is a histogram of samples from the data distribution,
        and pg is a histogram of generated samples.
        '''
        xs = np.linspace(-self.gen.range, self.gen.range, num_points)
        bins = np.linspace(-self.gen.range, self.gen.range, num_bins)

        # decision boundary
        db = np.zeros((num_points, 1))
        for i in range(num_points // self.batch_size):
            db[self.batch_size * i:self.batch_size * (i + 1)] = session.run(self.D1, {
                self.x: np.reshape(
                    xs[self.batch_size * i:self.batch_size * (i + 1)],
                    (self.batch_size, 1)
                )
            })

        # data distribution
        d = self.data.sample(num_points)
        pd, _ = np.histogram(d, bins=bins, density=True)

        # generated samples
        zs = np.linspace(-self.gen.range, self.gen.range, num_points)
        g = np.zeros((num_points, 1))
        for i in range(num_points // self.batch_size):
            g[self.batch_size * i:self.batch_size * (i + 1)] = session.run(self.G, {
                self.z: np.reshape(
                    zs[self.batch_size * i:self.batch_size * (i + 1)],
                    (self.batch_size, 1)
                )
            })
        pg, _ = np.histogram(g, bins=bins, density=True)

        return db, pd, pg

    def _plot_distributions(self, session):
        db, pd, pg = self._samples(session)
        db_x = np.linspace(-self.gen.range, self.gen.range, len(db))
        p_x = np.linspace(-self.gen.range, self.gen.range, len(pd))
        f, ax = plt.subplots(1)
        ax.plot(db_x, db, label='decision boundary')
        ax.set_ylim(0, 1)
        plt.plot(p_x, pd, label='real data')
        plt.plot(p_x, pg, label='generated data')
        plt.title('1D Generative Adversarial Network')
        plt.xlabel('Data values')
        plt.ylabel('Probability density')
        plt.legend()
        plt.show()

    def _save_animation(self):
        f, ax = plt.subplots(figsize=(6, 4))
        f.suptitle('1D Generative Adversarial Network', fontsize=15)
        plt.xlabel('Data values')
        plt.ylabel('Probability density')
        ax.set_xlim(-6, 6)
        ax.set_ylim(0, 1.4)
        line_db, = ax.plot([], [], label='decision boundary')
        line_pd, = ax.plot([], [], label='real data')
        line_pg, = ax.plot([], [], label='generated data')
        frame_number = ax.text(
            0.02,
            0.95,
            '',
            horizontalalignment='left',
            verticalalignment='top',
            transform=ax.transAxes
        )
        ax.legend()

        db, pd, _ = self.anim_frames[0]
        db_x = np.linspace(-self.gen.range, self.gen.range, len(db))
        p_x = np.linspace(-self.gen.range, self.gen.range, len(pd))

        def init():
            line_db.set_data([], [])
            line_pd.set_data([], [])
            line_pg.set_data([], [])
            frame_number.set_text('')
            return (line_db, line_pd, line_pg, frame_number)

        def animate(i):
            frame_number.set_text(
                'Frame: {}/{}'.format(i, len(self.anim_frames))
            )
            db, pd, pg = self.anim_frames[i]
            line_db.set_data(db_x, db)
            line_pd.set_data(p_x, pd)
            line_pg.set_data(p_x, pg)
            return (line_db, line_pd, line_pg, frame_number)

        anim = animation.FuncAnimation(
            f,
            animate,
            init_func=init,
            frames=len(self.anim_frames),
            blit=True
        )
        anim.save(self.anim_path, fps=30, extra_args=['-vcodec', 'libx264'])


def main(args):
    model = GAN(
        DataDistribution(),
        GeneratorDistribution(range=8),
        args.num_steps,
        args.batch_size,
        args.minibatch,
        args.log_every,
        args.anim
    )
    model.train()


def parse_args():
    parser = argparse.ArgumentParser()
    parser.add_argument('--num-steps', type=int, default=1200,
                        help='the number of training steps to take')
    parser.add_argument('--batch-size', type=int, default=12,
                        help='the batch size')
    parser.add_argument('--minibatch', type=bool, default=False,
                        help='use minibatch discrimination')
    parser.add_argument('--log-every', type=int, default=10,
                        help='print loss after this many steps')
    parser.add_argument('--anim', type=str, default=None,
                        help='name of the output animation file (default: none)')
    return parser.parse_args()


if __name__ == '__main__':
    '''
    data_sample = DataDistribution()
    d = data_sample.sample(10)
    print(d)
    '''
    main(parse_args())
```

References:

- An introduction to Generative Adversarial Networks (with code in TensorFlow)

- Generative Adversarial Nets in TensorFlow (Part I)

David 9


Nice!

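"We want to fool the discriminator, so we push D2's output to be as large as possible"; `loss_d = tf.reduce_mean(-tf.log(D1) - tf.log(1 - D2))`; `loss_g = tf.reduce_mean(-tf.log(D2))` ... I don't quite follow this part. Could the author explain it a bit more?

The discriminator and the generator have different objective functions.

The discriminator's goal is to tell real data apart from the forged, generated data.

The generator's goal is to make the data it generates look more like real data, so all it needs to do is make D2's output as large as possible.

If you still have questions, contact me on WeChat (yanchao727727) or by email: yanchao727@gmail.com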