第五届ICLR(ICLR2017)最近被炒的厉害,David 9回顾去年著名论文All you need is a good init,当时提出了一种新型初始化权重的方法,号称在Cifar-10上达到94.16%的精度,碰巧最近在看Keras。

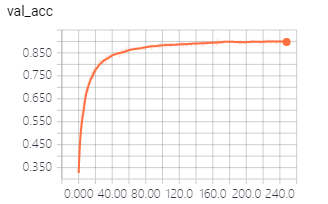

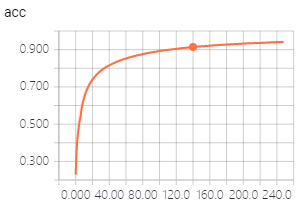

好!那就用Keras来还原一下这个Trick。效果果然不错,没怎么调参,差不多200个epoch,testing准确率就徘徊在90%了,training准确率到了94%:

如果你也想试试只需2步:

1. 导入论文的Keras初始化插件:

-

git clone https://github.com/ducha-aiki/LSUV-keras

2. 使用David 9还原的代码运行。注意:大约有15层卷积,最好考虑使用GPU。

废话少说,上代码:

from __future__ import print_function

import keras

from keras.datasets import cifar10

from keras.preprocessing.image import ImageDataGenerator

from keras.models import Sequential

from keras.layers import Dense, Dropout, Activation, Flatten

from keras.layers import Conv2D, MaxPooling2D, ZeroPadding2D, GlobalMaxPooling2D

from lsuv_init import LSUVinit

batch_size = 32

num_classes = 10

epochs = 1600

data_augmentation = True

# The data, shuffled and split between train and test sets:

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

print('x_train shape:', x_train.shape)

print(x_train.shape[0], 'train samples')

print(x_test.shape[0], 'test samples')

# Convert class vectors to binary class matrices.

y_train = keras.utils.to_categorical(y_train, num_classes)

y_test = keras.utils.to_categorical(y_test, num_classes)

model = Sequential()

model.add(Conv2D(32, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(32, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(32, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(48, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(48, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

model.add(Conv2D(80, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(80, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(80, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(80, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(80, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

model.add(Conv2D(128, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(128, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(128, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(128, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(Conv2D(128, (3, 3), padding='same',

input_shape=x_train.shape[1:]))

model.add(Activation('relu'))

model.add(GlobalMaxPooling2D())

model.add(Dropout(0.25))

#model.add(ZeroPadding2D((1, 1)))

#model.add(MaxPooling2D(pool_size=(2, 2)))

#model.add(Flatten())

#model.add(Dropout(0.2))

'''

model.add(Conv2D(512, (3, 3), padding='same'))

model.add(Activation('relu'))

model.add(ZeroPadding2D((1, 1)))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

'''

model.add(Dense(500))

model.add(Activation('relu'))

model.add(Dropout(0.25))

model.add(Dense(num_classes))

model.add(Activation('softmax'))

# initiate RMSprop optimizer

opt = keras.optimizers.Adam(lr=0.0001)

# Let's train the model using RMSprop

model.compile(loss='categorical_crossentropy',

optimizer=opt,

metrics=['accuracy'])

x_train = x_train.astype('float32')

x_test = x_test.astype('float32')

x_train /= 255

x_test /= 255

model = LSUVinit(model,x_train[:batch_size,:,:,:])

tbCallBack = keras.callbacks.TensorBoard(log_dir='./Graph2', histogram_freq=0, write_graph=True, write_images=True)

if not data_augmentation:

print('Not using data augmentation.')

model.fit(x_train, y_train,

batch_size=batch_size,

epochs=epochs,

validation_data=(x_test, y_test),

shuffle=True, callbacks=[tbCallBack])

else:

print('Using real-time data augmentation.')

# This will do preprocessing and realtime data augmentation:

'''

datagen = ImageDataGenerator(

featurewise_center=False, # set input mean to 0 over the dataset

samplewise_center=False, # set each sample mean to 0

featurewise_std_normalization=False, # divide inputs by std of the dataset

samplewise_std_normalization=False, # divide each input by its std

zca_whitening=False, # apply ZCA whitening

rotation_range=0, # randomly rotate images in the range (degrees, 0 to 180)

width_shift_range=0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range=0.1, # randomly shift images vertically (fraction of total height)

horizontal_flip=True, # randomly flip images

vertical_flip=False) # randomly flip images

'''

datagen = ImageDataGenerator(

featurewise_center=False, # set input mean to 0 over the dataset

samplewise_center=False, # set each sample mean to 0

featurewise_std_normalization=False, # divide inputs by std of the dataset

samplewise_std_normalization=False, # divide each input by its std

zca_whitening=False, # apply ZCA whitening

rotation_range=10, # randomly rotate images in the range (degrees, 0 to 180)

width_shift_range=0.2, # randomly shift images horizontally (fraction of total width)

height_shift_range=0.2, # randomly shift images vertically (fraction of total height)

horizontal_flip=True, # randomly flip images

vertical_flip=False) # randomly flip images

# Compute quantities required for feature-wise normalization

# (std, mean, and principal components if ZCA whitening is applied).

datagen.fit(x_train)

# Fit the model on the batches generated by datagen.flow().

model.fit_generator(datagen.flow(x_train, y_train,

batch_size=batch_size),

steps_per_epoch=x_train.shape[0] // batch_size,

epochs=epochs,

validation_data=(x_test, y_test), callbacks=[tbCallBack])

运行时,可以打开tensorboard查看实时训练:

tensorboard --logdir=Graph2 --host 0.0.0.0 --port 8888

上面是我的手稿代码,如果你觉得不满意,可以试着调参。如果有更好的改进方案和调参方案,请留言或联系我,把研究的快乐传递给大家~

参考文献:

- All you need is a good init

- http://machinelearningmastery.com/object-recognition-convolutional-neural-networks-keras-deep-learning-library/

本文章属于“David 9的博客”原创,如需转载,请联系微信: david9ml,或邮箱:yanchao727@gmail.com

或直接扫二维码:

The following two tabs change content below.

David 9

邮箱:yanchao727@gmail.com

微信: david9ml

Latest posts by David 9 (see all)

- 修订特征已经变得切实可行, “特征矫正工程”是否会成为潮流? - 27 3 月, 2024

- 量子计算系列#2 : 量子机器学习与量子深度学习补充资料,QML,QeML,QaML - 29 2 月, 2024

- “现象意识”#2:用白盒的视角研究意识和大脑,会是什么景象?微意识,主体感,超心智,意识中层理论 - 16 2 月, 2024